Apple announced this week that it will start rolling out new child safety features. These features are coming later this year in updates to iOS 15, iPadOS 15, watchOS 8, and macOS Monterey. Apple says that this program is ambitious and that protecting children is an important responsibility.

In this video, iCave Dave outlines the new child safety features which will start to appear later this year with iOS 15. Dave gives a good breakdown of how the new features will work and how well Apple is handling such a sensitive issue. There are three new ways that Apple will aim to protect children online.

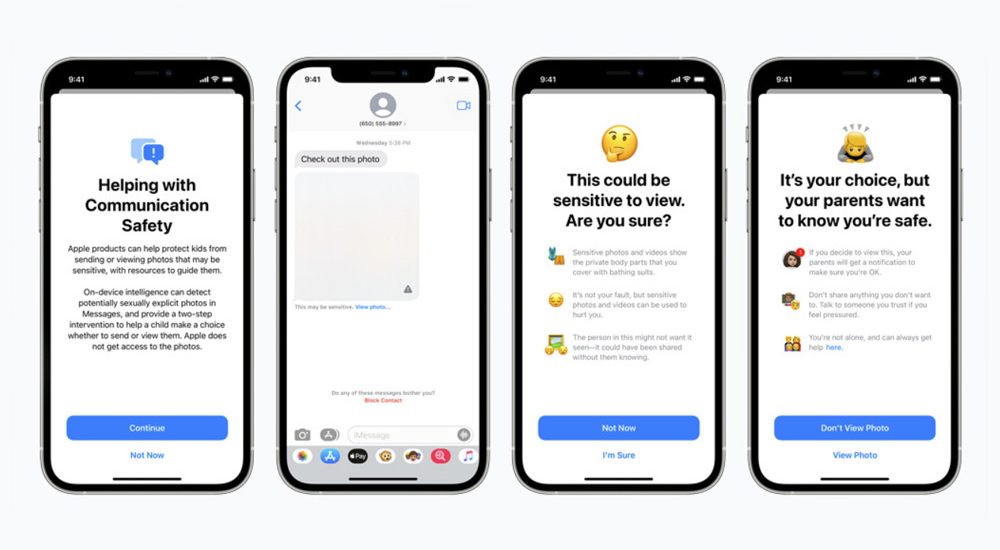

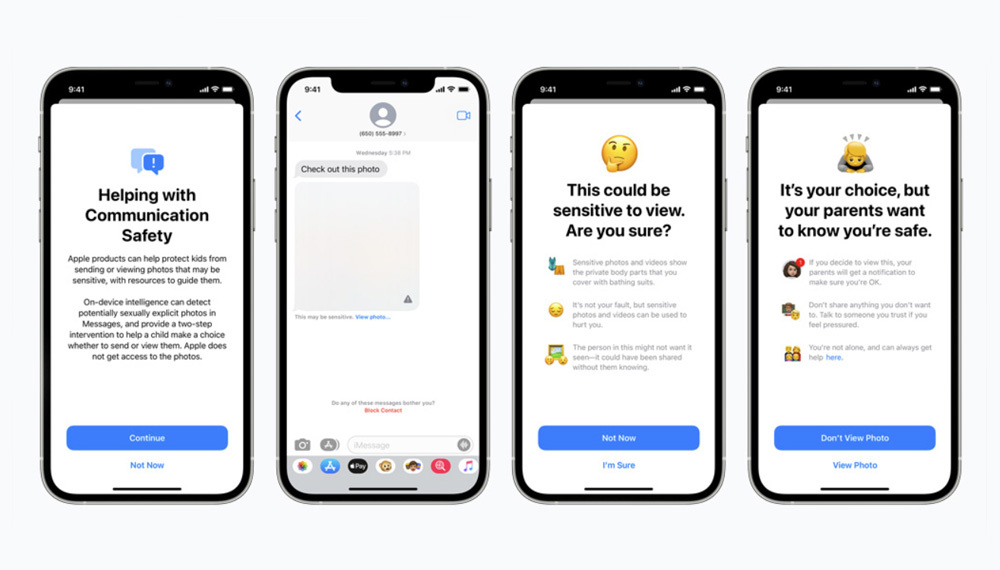

Safety in Messages

The Messages features will not be activated by default on all devices; they will need to be opted into for children’s devices that are set up as part of a family on your Apple devices. This is what Apple has to say about the protections for children coming to the Messages app as part of iOS 15:

The Messages app will add new tools to warn children and their parents when receiving or sending sexually explicit photos. When receiving this type of content, the photo will be blurred and the child will be warned, presented with helpful resources, and reassured it is okay if they do not want to view this photo. As an additional precaution, the child can also be told that to make sure they are safe, their parents will get a message if they do view it. Similar protections are available if a child attempts to send sexually explicit photos. The child will be warned before the photo is sent, and the parents can receive a message if the child chooses to send it.

New Guidance in Siri and Search

There will also be Siri warnings in place if a user tries to search for images of Child Sexual Abuse Material (CSAM). This is how Apple says these features will work:

Apple is also expanding guidance in Siri and Search by providing additional resources to help children and parents stay safe online and get help with unsafe situations. For example, users who ask Siri how they can report CSAM or child exploitation will be pointed to resources for where and how to file a report.

Siri and Search are also being updated to intervene when users perform searches for queries related to CSAM. These interventions will explain to users that interest in this topic is harmful and problematic, and provide resources from partners to get help with this issue.

I think these features sound like an excellent way to help protect children online.

CSAM Detection

Finally, the most contentious feature Apple is rolling out involves the on-device scanning of all images before they are backed up to your iCloud account. The images are still encrypted, so Apple still can’t see them. They will simply be flagged if markers on a user’s image match markers in the database at the National Center for Missing and Exploited Children. Here’s what Apple has to say on this feature:

New technology in iOS and iPadOS will allow Apple to detect known CSAM images stored in iCloud Photos. This will enable Apple to report these instances to the National Center for Missing and Exploited Children (NCMEC).

Apple’s method of detecting known CSAM is designed with user privacy in mind. Instead of scanning images in the cloud, the system performs on-device matching using a database of known CSAM image hashes provided by NCMEC and other child safety organizations. Apple further transforms this database into an unreadable set of hashes that is securely stored on users’ devices.

This innovative new technology allows Apple to provide valuable and actionable information to NCMEC and law enforcement regarding the proliferation of known CSAM. And it does so while providing significant privacy benefits over existing techniques since Apple only learns about users’ photos if they have a collection of known CSAM in their iCloud Photos account. Even in these cases, Apple only learns about images that match known CSAM.
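To make the matching idea a little more concrete, here is a minimal Python sketch. It is a simplification under several assumptions, not Apple's implementation: the function names (`image_hash`, `count_matches`, `should_flag`) and the `MATCH_THRESHOLD` value are hypothetical, SHA-256 stands in for whatever image hash Apple actually uses (so it only matches byte-identical files), and the sketch skips the step where the database is transformed into an unreadable form. It only illustrates the two ideas in the quote above: images are compared against a list of known hashes, and nothing is flagged until there is a collection of matches.

```python
import hashlib

# Hypothetical value: the announcement only says a "collection" of matches is needed.
MATCH_THRESHOLD = 3


def image_hash(image_bytes: bytes) -> str:
    """Stand-in hash (assumption): SHA-256 of the raw bytes, not Apple's real hash."""
    return hashlib.sha256(image_bytes).hexdigest()


def count_matches(images, known_hashes) -> int:
    """Count how many images hash to a value in the known-hash set."""
    return sum(1 for img in images if image_hash(img) in known_hashes)


def should_flag(images, known_hashes, threshold: int = MATCH_THRESHOLD) -> bool:
    """Flag only when the number of matches reaches the threshold."""
    return count_matches(images, known_hashes) >= threshold


# Placeholder byte strings stand in for image files.
known = {image_hash(b"known-1"), image_hash(b"known-2"), image_hash(b"known-3")}
library = [b"holiday", b"known-1", b"known-2"]
print(should_flag(library, known))  # prints False: two matches, below the threshold of 3
```

Note that in this sketch a photo that is not in the known list contributes nothing to the count, which mirrors Apple's claim that it only learns about images that match known entries.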

Concerns Over This Technology

It would be hard for anyone to fault Apple for making changes to protect children online and report images of CSAM. I completely agree with iCave Dave on the handling of these types of images and content of that nature. It seems as though Apple is handling the protection of children in a considered and appropriate way.

Personally, I’m inclined to agree with some critics of the image-scanning technology and the precedent it sets. We would all agree that the production and sharing of CSAM images is simply wrong. The issue with scanning images is deciding when reporting users is appropriate: where should the line be drawn? Should images of drug use be flagged? Some would say they absolutely should. What about terrorism? Would that be defined by the government of each territory? In the West, we’re probably okay, but other parts of the world might have different definitions of “terrorist.” Who would decide what should be reported and to whom it is reported?

I think we all agree that the types of images being discussed in this video and specifically mentioned by Apple are bad, that perpetrators should be flagged and reported, and that the world would be a better place if these types of images were not being produced or shared. I have yet to see anyone arguing in defense of CSAM images. However, I do believe there is a discussion to be had around any further use of this technology. What about countries where homosexuality is illegal? Is it a possible future outcome that images of consenting adults doing something the government doesn’t approve of get flagged and reported? This might seem like an unlikely possibility, but with the precedent this technology sets, it is a possible eventuality.

Would governments with questionable ethics be able, in the future, to pressure Apple into flagging images they dictate in order to keep selling iPhones in that country? I believe, with how focused Apple currently is on customers and their privacy, it’s unlikely to be an issue anytime soon.

Google and Facebook have been scanning uploaded images for this type of content for a number of years. Apple is now going to do it on the device. Does this detract from Apple’s previous statement that “privacy is a human right”?

A cynic might say that this technology is being introduced in the interest of protecting children because that’s a very difficult subject for anyone to disagree with.

What are your thoughts on Apple scanning users’ images? Are critics of the technology overreacting? Should a service provider be able to check anything stored on their servers? How would you feel if Adobe started scanning images on Creative Cloud or your Lightroom library for specific image types?

Let me know in the comments, but please remember to be polite, even if you disagree with someone’s point of view.